LLM Web Interface

Chat interface for Groq LLMs with streaming & secure key management.

A web application I developed as a chat interface for Large Language Models, integrated with Groq's high-speed APIs.

This project was a great opportunity to explore real-time data streaming and robust full-stack development. It features a modern tech stack centered around React, Node.js, and MongoDB, with a strong focus on security and a dynamic user experience.

🚀 Key Features & Highlights

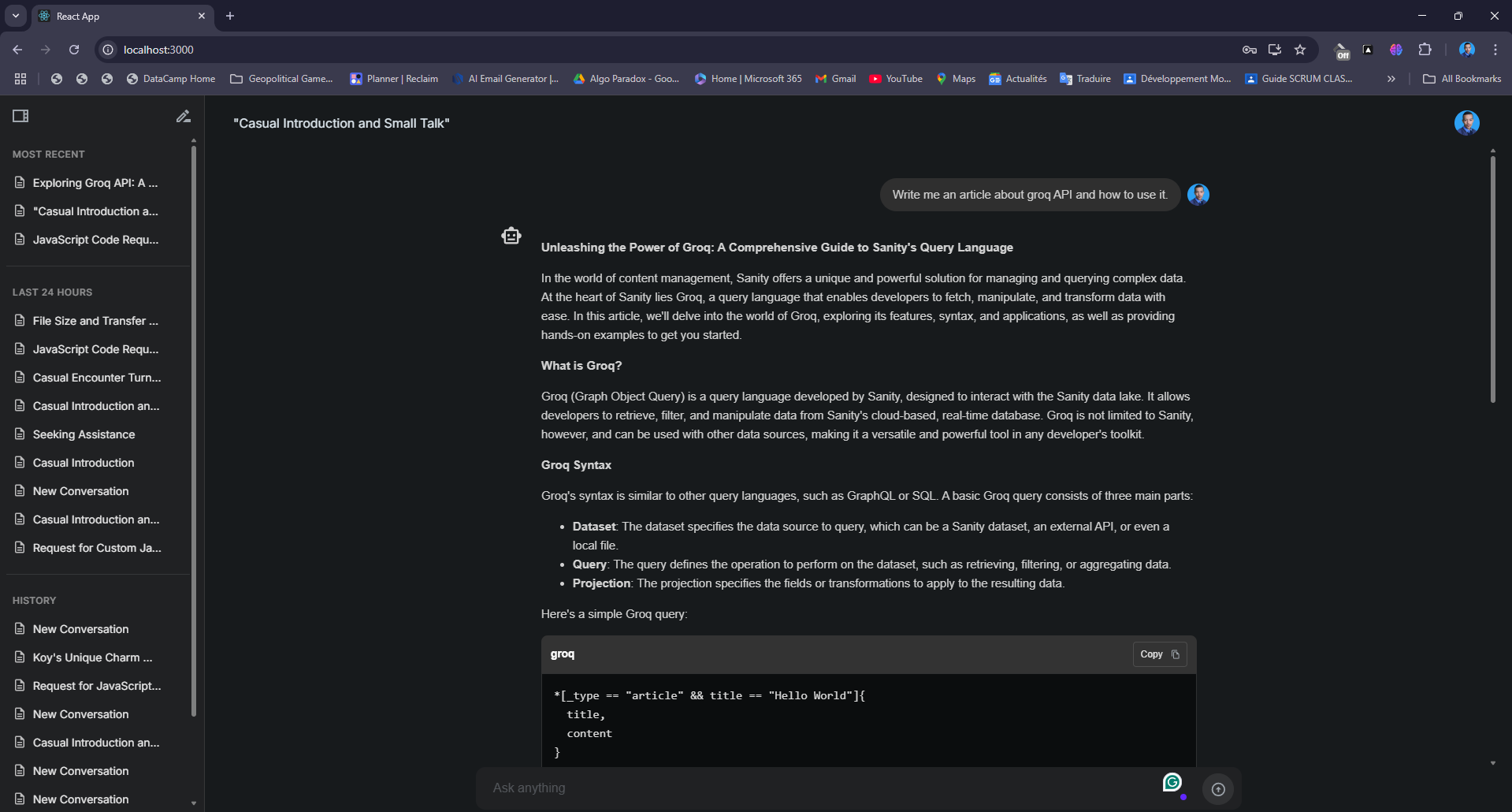

- Blazing-Fast Response Streaming: Utilizes server-sent events (SSE) to deliver a fluid, token-by-token response from the LLM, making the conversation feel instantaneous and natural.

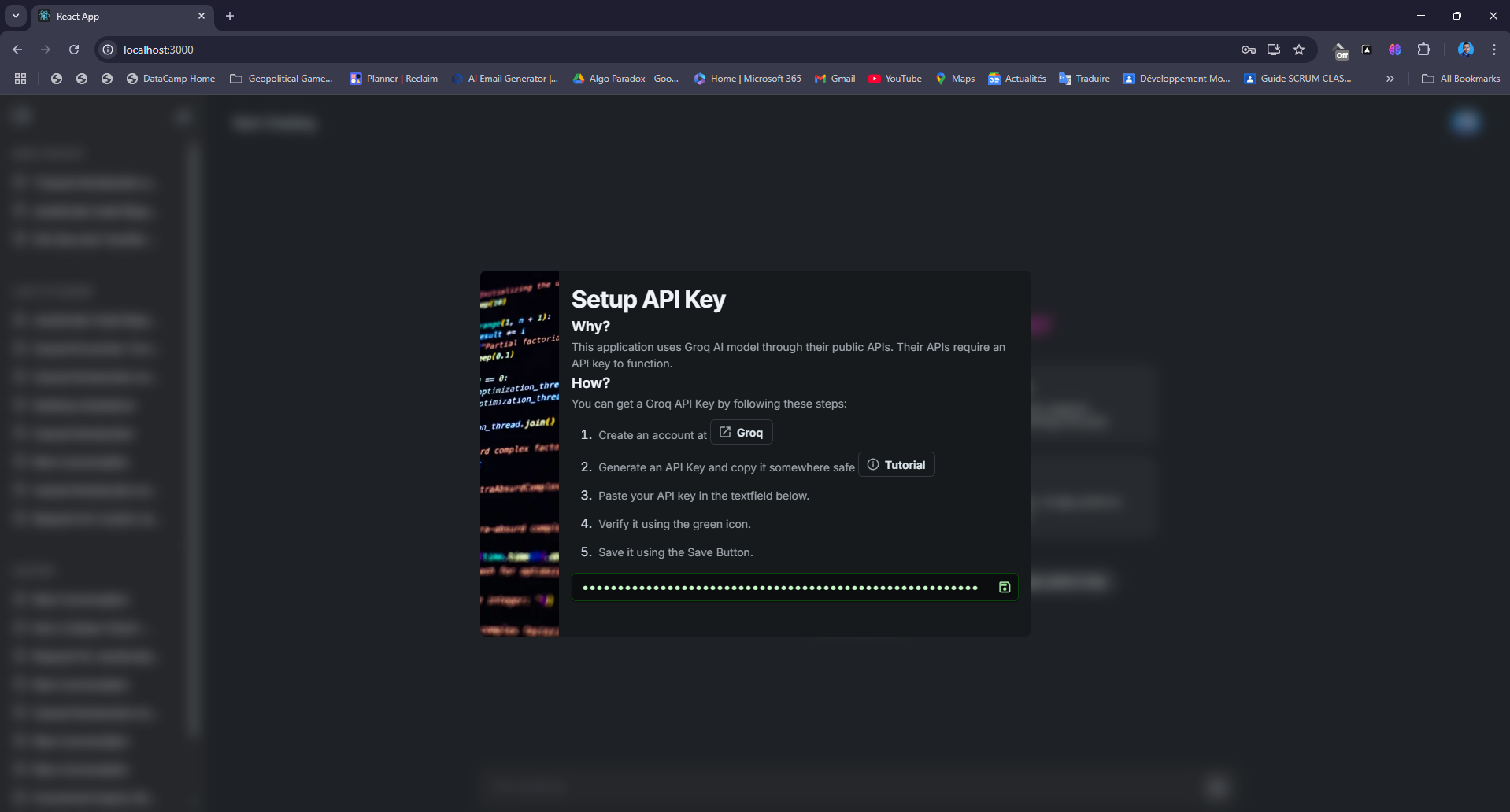

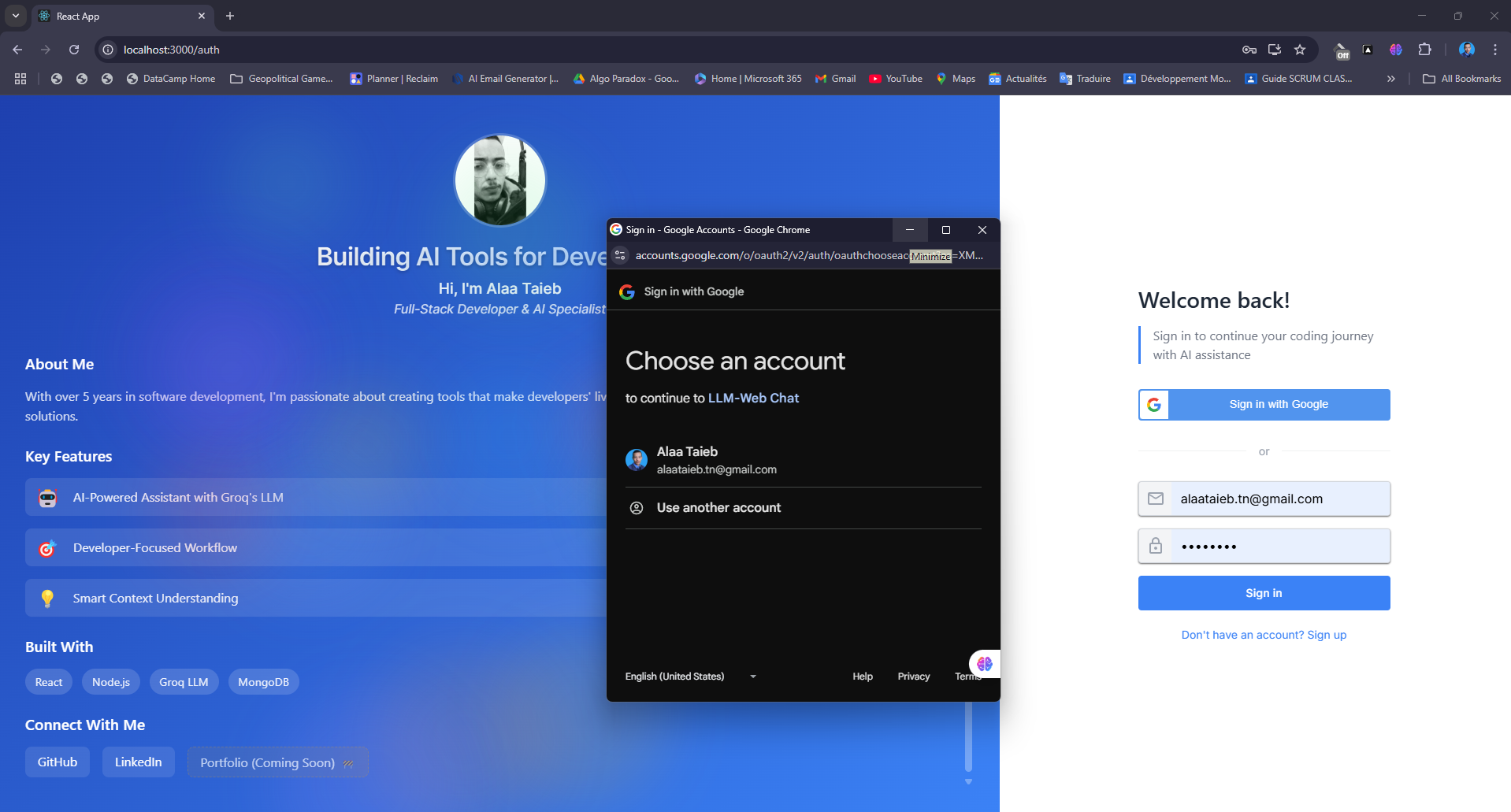

- Robust Security: Groq API keys are encrypted by a custom key-management system before they are stored. Sign-in uses JWT authentication with Google OAuth.

- Intuitive & Rich Interface: The chat interface supports full markdown rendering with syntax highlighting for code blocks, providing a clean and professional display of LLM responses.

- Modern Design: Built with Joy UI (from the MUI family), the application is fully responsive across screen sizes.

💻 Technical Stack

The project is built on a robust MERN-like stack, with a clear separation between the frontend and backend.

Frontend

- React.js: Used with the Context API for efficient state management.

- Joy UI (MUI): Provides the responsive component library.

- Real-time Streaming: Manages the incoming token stream from the backend.
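As a rough illustration of the frontend streaming item, the sketch below reads an SSE-formatted response body with `fetch` and passes each token to a callback. The function names (`parseSseBuffer`, `readTokenStream`) and the `{ content }` JSON payload shape are assumptions made for this example, not taken from the project. `fetch` is used instead of `EventSource` on the assumption that the chat request is a POST, which `EventSource` does not support.

```javascript
// Hypothetical helper: splits buffered SSE text into complete events.
// Simplification: only "data: <json>" lines are handled.
function parseSseBuffer(buffer) {
  const parts = buffer.split("\n\n");
  const rest = parts.pop(); // the last piece may be an incomplete event
  const events = [];
  for (const part of parts) {
    for (const line of part.split("\n")) {
      if (line.startsWith("data: ")) {
        events.push(JSON.parse(line.slice(6)));
      }
    }
  }
  return { events, rest };
}

// Hypothetical consumer: reads a fetch() response body chunk by chunk and
// calls onToken for every payload that carries a `content` string.
async function readTokenStream(response, onToken) {
  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  let buffer = "";
  while (true) {
    const { value, done } = await reader.read();
    if (done) break;
    buffer += decoder.decode(value, { stream: true });
    const { events, rest } = parseSseBuffer(buffer);
    buffer = rest; // keep the partial event for the next read
    for (const event of events) {
      if (event.content) onToken(event.content);
    }
  }
}
```

In a React component, `onToken` would typically append the token to the message held in state, so the reply grows as chunks arrive.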

Backend

- Node.js & Express.js: A RESTful API serves as the core of the application.

- MongoDB: The NoSQL database handles user and API key data.

- JWT & Google OAuth: Manages secure user authentication and sessions.

- Groq API: Handles all LLM interactions with streaming support.

🛠️ Project Architecture

This diagram illustrates how the different components of the application communicate to provide a seamless experience.

🔬 Technical Deep Dive

Real-time Message Streaming

Here's a glimpse into how the backend streams responses from the Groq API to the frontend.

    const stream = await groq.chat.completions.create({
      messages: apiMessages,
      model: "llama3-70b-8192",
      stream: true,
    })

    for await (const chunk of stream) {
      const content = chunk.choices[0]?.delta?.content || ""
      // Logic to send content to the client via SSE
    }
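The placeholder comment in the loop above could be filled in roughly as follows. This is a minimal sketch, not the project's actual code: the helper names (`formatSseEvent`, `pipeCompletionToSse`), the `{ content }` payload, and the `{ done: true }` end marker are assumptions. `res` is expected to behave like a Node.js or Express response object.

```javascript
// Hypothetical helper: formats one SSE message. The "data:" prefix and the
// blank line that ends each message come from the Server-Sent Events format.
function formatSseEvent(payload) {
  return `data: ${JSON.stringify(payload)}\n\n`;
}

// Hypothetical helper: forwards chunks from an async iterable shaped like the
// Groq stream to an HTTP response, and returns the full text that was sent.
async function pipeCompletionToSse(stream, res) {
  res.writeHead(200, {
    "Content-Type": "text/event-stream",
    "Cache-Control": "no-cache",
  });
  let fullText = "";
  for await (const chunk of stream) {
    const content = chunk.choices[0]?.delta?.content || "";
    if (!content) continue; // skip chunks that carry no text
    fullText += content;
    res.write(formatSseEvent({ content }));
  }
  res.write(formatSseEvent({ done: true })); // assumed end-of-stream marker
  res.end();
  return fullText;
}
```

Returning the accumulated text lets the route handler do something with the complete reply once streaming ends, for example saving it, if the application keeps chat history.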

Secure Key Encryption

The API key management system uses encryption to protect sensitive user data before it is stored in the database.

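    // A simplified example of the encryption process.
    // Note: `crypto.encrypt` is presumably a project helper; Node's built-in
    // crypto module has no encrypt() function.
    const encrypted = crypto.encrypt(apiKey)
    await ApiKey.create({
      user: userId,
      key: encrypted,
      name: keyName,
    })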
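The source does not say which cipher the project uses. As one possible way to implement such an encryption helper, the sketch below uses AES-256-GCM from Node's built-in `crypto` module. The function names, the `MASTER_KEY` constant, and the `iv.tag.ciphertext` storage format are all assumptions for illustration.

```javascript
const nodeCrypto = require("node:crypto");

// Assumption: a 32-byte master key. A real deployment would load this from
// an environment variable rather than generate a new one at startup.
const MASTER_KEY = nodeCrypto.randomBytes(32);

// Encrypts an API key and returns "iv.tag.ciphertext", each part base64.
function encryptApiKey(plaintext, key = MASTER_KEY) {
  const iv = nodeCrypto.randomBytes(12); // fresh 96-bit nonce per encryption
  const cipher = nodeCrypto.createCipheriv("aes-256-gcm", key, iv);
  const ciphertext = Buffer.concat([
    cipher.update(plaintext, "utf8"),
    cipher.final(),
  ]);
  const tag = cipher.getAuthTag(); // lets decryption detect tampering
  return [iv, tag, ciphertext].map((b) => b.toString("base64")).join(".");
}

// Reverses encryptApiKey; throws if the stored value was modified.
function decryptApiKey(stored, key = MASTER_KEY) {
  const [iv, tag, ciphertext] = stored
    .split(".")
    .map((part) => Buffer.from(part, "base64"));
  const decipher = nodeCrypto.createDecipheriv("aes-256-gcm", key, iv);
  decipher.setAuthTag(tag);
  return Buffer.concat([
    decipher.update(ciphertext),
    decipher.final(),
  ]).toString("utf8");
}
```

An authenticated mode such as GCM is chosen here so that a corrupted or altered database value fails loudly on decryption instead of yielding a garbage key.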

🚀 How to Run the Project

You can get this project up and running with a few simple commands.

- Clone the repository:

      git clone https://github.com/Alaa-Taieb/LLM-Web-Interface.git
      cd LLM-Web-Interface

- Install dependencies (run from the repository root):

      # Frontend
      (cd client && npm install)
      # Backend
      (cd server && npm install)

- Set up environment variables: create a `.env` file in both the `client` and `server` directories with the variables listed in the original project description.

- Start the servers, each in its own terminal from the repository root:

      # Frontend
      cd client && npm start
      # Backend
      cd server && npm run dev